We believe that the most effective way to understand the mechanisms underlying a system is to reconstruct it as it exists in nature. Using computational methods, we build models of such systems, with a particular focus on animal perception and behavior. Our methodology is rooted in psychophysical and ecological perspectives, which lets us capture how animals perceive and interact with their environment.

This computer-based approach offers unprecedented opportunities to simulate complex biological processes and test hypotheses in ways that were previously impossible. By creating virtual environments and digital organisms, we can manipulate variables and observe outcomes in a controlled, replicable manner. This not only enhances our understanding of existing biological systems but also allows us to predict and explore potential evolutionary pathways.

We expect that this methodology, which bridges biology and computer science, will lead to new discoveries in biology. As we refine our models and expand our computational capabilities, we anticipate that it will become an indispensable tool for biological research, offering new insights into the fundamental principles that govern life and behavior.

Research Projects

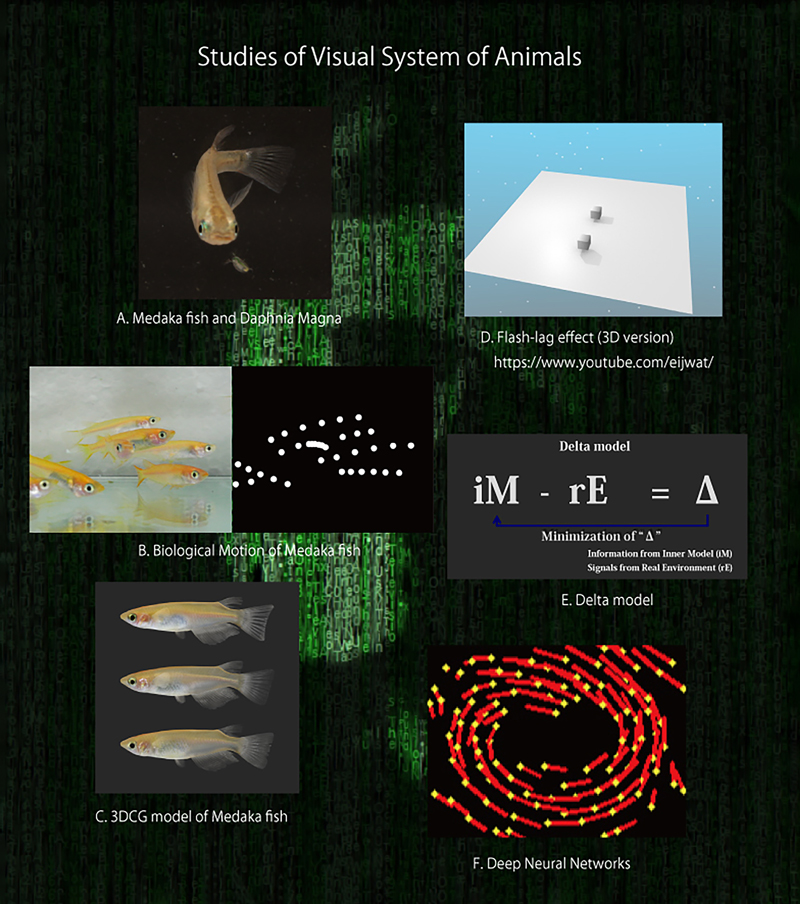

• Exploring visual information processing by constructing brain models that reproduce visual illusion perception using deep learning

• Exploring emotional psychology and color perception using large language models

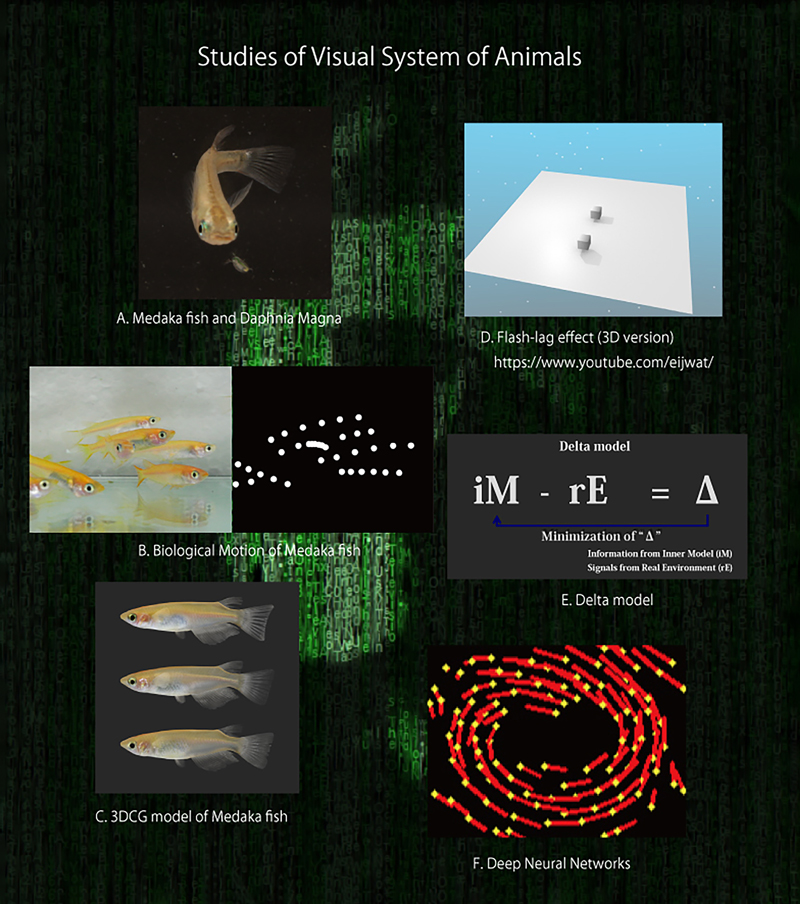

I. Psychophysical study of medaka fish

One of our major subjects is the psychophysical and computational study of medaka (Oryzias latipes; Matsunaga & Watanabe, 2010). We have made progress in studies of predator-prey interactions using medaka and zooplankton. Visual motion cues are among the most important factors eliciting animal behaviors, including predator-prey interactions in aquatic environments. To identify the elements of motion that trigger selective predation behavior, we used a virtual plankton system in which we analyzed predation behavior in response to computer-generated plankton. We confirmed that medaka exhibited predation behavior toward several characteristic movements of the virtual plankton, particularly a swimming pattern that could be characterized as pink noise motion (Matsunaga & Watanabe, 2012). In a related study, we analyzed the swimming behavior of adult water fleas (Daphnia magna) and found clear sexual differences: laterally biased diffusion in males, in contrast to nondirectional diffusion in females (Toyota et al., 2022).
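The pink noise swimming pattern mentioned above can be illustrated with a short sketch. This is not the stimulus code used in the cited study: it assumes, purely for illustration, that 1/f-like noise drives the x and y velocity of a virtual plankton, and it approximates pink noise with the Voss-McCartney algorithm. The function names `pink_noise` and `virtual_plankton_path` and the parameters `n_rows` and `speed` are hypothetical.

```python
import random


def pink_noise(n_samples, n_rows=8, seed=None):
    """Approximate 1/f (pink) noise with the Voss-McCartney algorithm.

    Several random rows are summed; row k is refreshed once every
    2**(k+1) samples, so slow rows add low-frequency power and fast
    rows add high-frequency power.
    """
    rng = random.Random(seed)
    rows = [rng.uniform(-1.0, 1.0) for _ in range(n_rows)]
    total = sum(rows)
    samples = []
    for i in range(1, n_samples + 1):
        # Index of the lowest set bit of i selects which row to refresh.
        k = (i & -i).bit_length() - 1
        if k < n_rows:
            total -= rows[k]
            rows[k] = rng.uniform(-1.0, 1.0)
            total += rows[k]
        samples.append(total / n_rows)  # normalised to roughly [-1, 1]
    return samples


def virtual_plankton_path(n_frames, speed=1.0, seed=0):
    """Integrate two independent pink-noise velocity series into an (x, y) path.

    Assumption: the noise is applied to velocity; the source does not say
    which motion variable carried the pink noise.
    """
    vx = pink_noise(n_frames, seed=seed)
    vy = pink_noise(n_frames, seed=seed + 1)
    x = y = 0.0
    path = [(x, y)]
    for dx, dy in zip(vx, vy):
        x += speed * dx
        y += speed * dy
        path.append((x, y))
    return path


path = virtual_plankton_path(n_frames=300, speed=0.5, seed=42)
```

Each element of `path` is one frame's position for the virtual plankton. Replacing `pink_noise` with uncorrelated samples would give a white noise control under the same assumptions.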

Many fish species are known to live in groups, and visual cues have been shown to play a crucial role in the formation of shoals. Using biological motion stimuli, which depict a moving creature with just a few isolated points, we examined whether physical motion information is involved in inducing shoaling behavior. We found that presenting virtual biological motion clearly induces shoaling behavior, and we showed which aspects of this motion are critical for that induction. Motion and behavioral characteristics can be valuable in recognizing animal species, sex, and group members. Studies using biological motion stimuli will further enhance our understanding of how non-human animals extract and process information that is vital for their survival (Nakayasu & Watanabe, 2014).

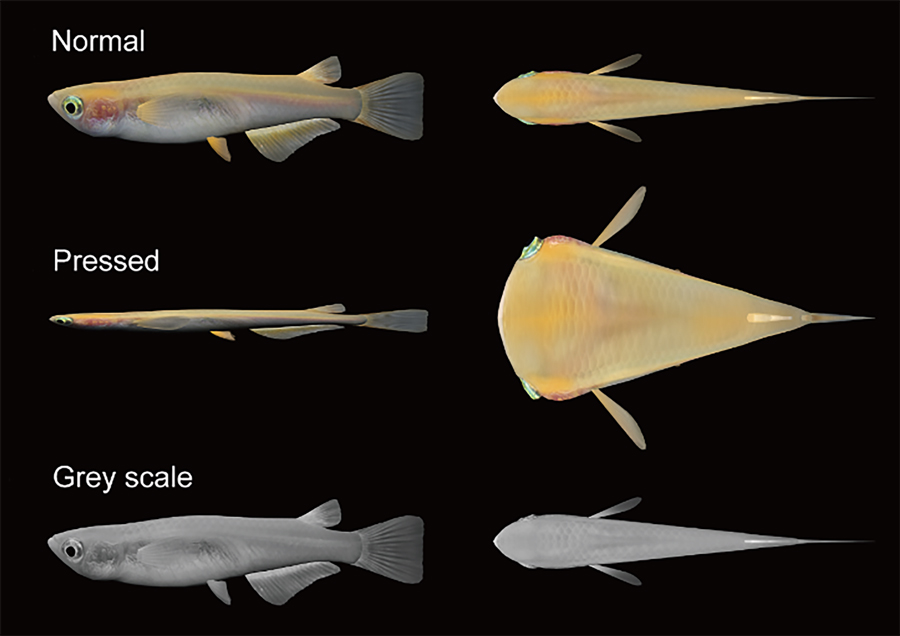

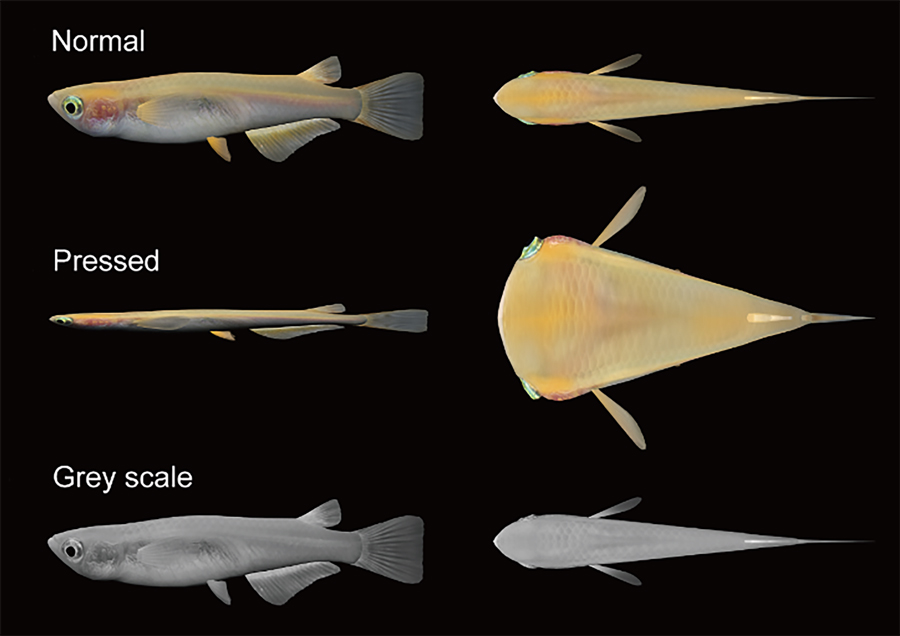

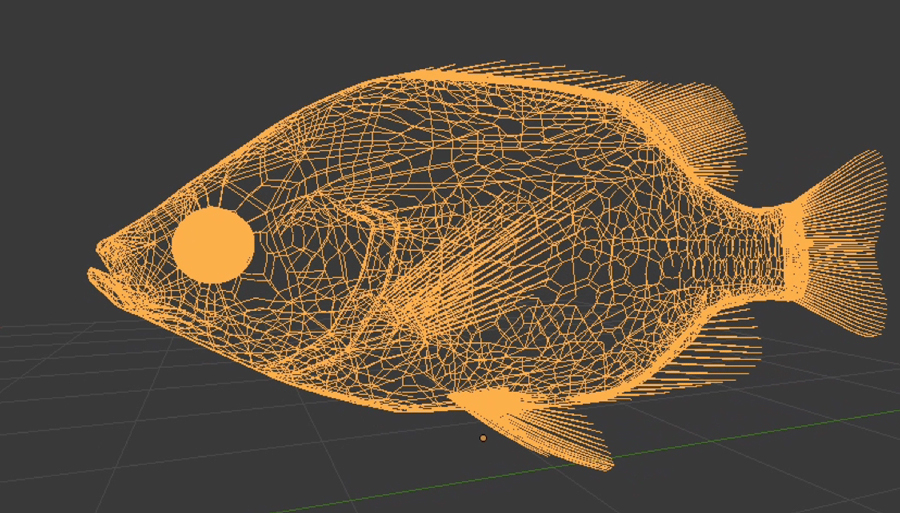

Additionally, we have developed a novel method for behavior analysis using 3D computer graphics (Nakayasu et al., 2017). Fine control of the various features of living fish has been difficult to achieve in behavioral studies, whereas computer graphics allow us to manipulate morphological and motion cues systematically. We have therefore constructed 3D computer graphic animations based on tracking coordinate data and photo data obtained from actual medaka (Figure 1). These virtual 3D models allow us to represent medaka more faithfully and to analyze in more detail the properties of the visual stimuli that are critical for inducing various behaviors. This experimental system has been applied to studies of dynamic seasonal changes in color perception in medaka (Shimmura et al., 2017) and of underwater imaging technology (Abe et al., 2019; Yamamoto et al., 2019).

Figure 1. Virtual medaka fish.
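As a rough illustration of how tracking coordinate data might drive an animation, the sketch below converts a sequence of tracked 2D positions into per-frame keyframes (time, position, heading, and speed). It is a minimal example under stated assumptions, not the pipeline described in Nakayasu et al. (2017): the function name `keyframes_from_track`, the 2D simplification, and the keyframe fields are all hypothetical.

```python
import math


def keyframes_from_track(track, fps):
    """Convert tracked (x, y) positions into animation keyframes.

    track: list of (x, y) positions, one per video frame (assumed format).
    fps:   frame rate of the tracking video, in frames per second.
    Returns one keyframe per consecutive pair of positions.
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    frames = []
    for i in range(len(track) - 1):
        (x0, y0), (x1, y1) = track[i], track[i + 1]
        dx, dy = x1 - x0, y1 - y0
        frames.append({
            "t": i / fps,                                  # time in seconds
            "x": x0,
            "y": y0,
            "heading_deg": math.degrees(math.atan2(dy, dx)),  # body orientation
            "speed": math.hypot(dx, dy) * fps,             # distance units per second
        })
    return frames


# Hypothetical three-frame track: move right, then turn upward.
example = keyframes_from_track([(0.0, 0.0), (1.0, 0.0), (1.0, 1.0)], fps=10.0)
```

Keeping heading and speed as explicit fields makes it straightforward to manipulate motion cues independently of morphology, for example by replaying the same positions with a modified speed profile.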

Ⅱ. Psychophysical study of human & AI vision

Another of our major subjects is the psychophysical and theoretical study of the visual illusions experienced by humans and artificial intelligence (AI). One topic of recent debate in this area is the flash-lag effect, in which a moving object is perceived to be ahead of a flashed object when both objects are physically aligned. We developed a simple conceptual model explaining the flash-lag effect (Watanabe et al., 2010).

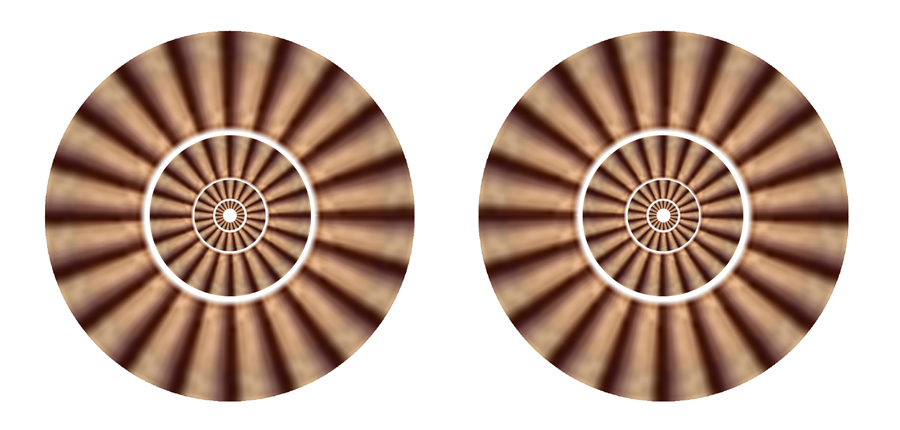

In recent years, we have developed several novel visual illusions, such as the shelf-shadow illusion (3rd Award of the Illusion Contest), the Monstre Benham illusion (2nd Award), the Morning Monster Illusion (10th Award), and the Genkikan Illusion (Figure 2).

Figure 2. Okazaki Genkikan.

Watanabe (NIBB) and Sinapayen (Sony CSL) won 2nd place at the 13th Visual Illusion Contest for the Okazaki Genkikan Illusion (http://www.psy.ritsumei.ac.jp/~akitaoka/sakkon/sakkon2021.html). It is one of the Poggendorff illusions and was found at the Okazaki Genkikan facility.

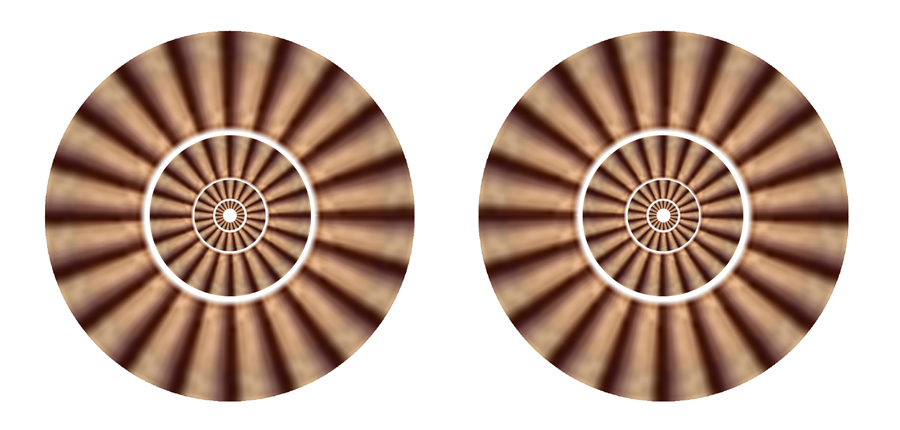

In 2018, we successfully generated deep neural networks (DNNs) that represent perceived rotational motion for illusion images that are not physically moving, similar to what humans experience in visual perception (Watanabe et al., 2018). These DNN models will help facilitate our future work in perception science. This experimental system was applied to the study of an evolutionary illusion generator in collaboration with Dr. Lana Sinapayen (Sony CSL) (Evolutionary Generation of Visual Motion Illusions, https://arxiv.org/abs/2112.13243, Sinapayen and Watanabe, 2021). Currently, a variety of research is being conducted using these AI systems (https://arxiv.org/abs/2106.12254).

In 2022, we examined the properties of the networks using a set of 1500 images, including ordinary static images of paintings and photographs as well as images of various types of motion illusions (Visual Illusions Dataset, https://doi.org/10.6084/m9.figshare.9878663). The results showed that the networks clearly distinguished illusory images from the others and reproduced illusory motion for various types of illusions, similar to human perception. Notably, the networks occasionally detected anomalous motion vectors even in ordinary static images in which humans did not perceive any illusory motion. Additionally, illusion-like designs with repeating patterns were generated from the areas where anomalous vectors were detected, and psychophysical experiments detected illusory motion perception in the generated designs (Figure 3). The observed inaccuracy of the networks will provide useful information for further understanding the information processing associated with human vision (Kobayashi et al., 2022).

Figure 3. A visual illusion extracted by AI.

Ⅲ. Ecological study of tactics in predators and prey

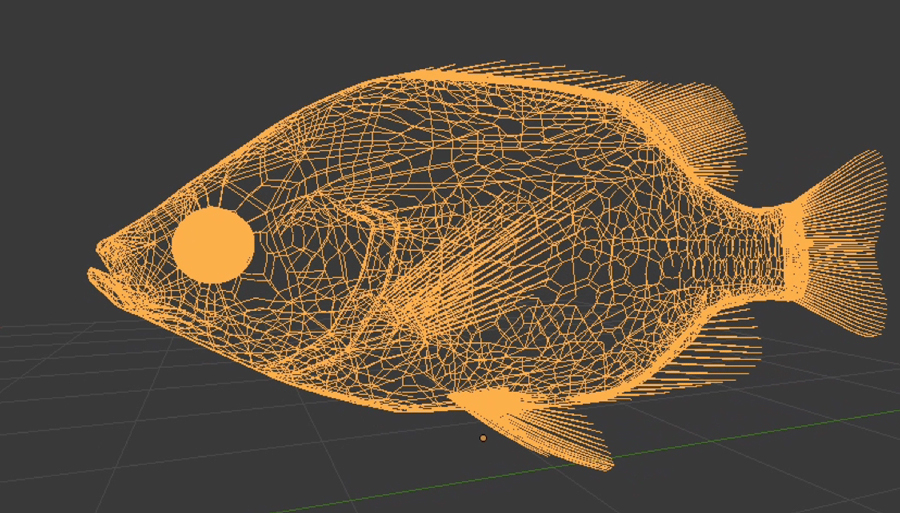

We are interested in the ecological aspects of behavioral interactions between predators and prey. In the course of co-evolution, predators and prey have developed various tactics to overcome each other. To elucidate the sophistication of these tactics, we have examined the mechanisms and efficacy of predatory and antipredator behaviors in several animals, such as fish, dragonflies, bats, and birds. In addition, we have developed an experimental system for these studies that presents interactive virtual predators to prey animals using animation (Figure 4) or robotics. The system consists of three modules: a sensing module, a command-generating module, and a virtual animal module. Each module employs technologies appropriate to the spatial scale. For example, at the scale of a small aquarium, we use high-speed cameras as the sensing module and virtual reality animation as the virtual animal module. At a large outdoor scale, we use real-time GPS as the sensing module and an unmanned aerial vehicle as the virtual animal module. The system can therefore cover a wide range of animals and is expected to strongly support the study of animal interactions.

Figure 4. A computer animation of the virtual predatory fish.
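The three-module structure described above (sensing, command generation, virtual animal) can be sketched as a simple control loop with swappable parts. This is only an illustration of the architecture, not the actual system: the class `InteractiveSystem`, the `pursue` rule, and the stand-in sensing and actuation functions are hypothetical, and real sensing (high-speed camera or GPS) and actuation (animation or unmanned aerial vehicle) are replaced here by plain Python callables.

```python
import math
from dataclasses import dataclass
from typing import Callable, Tuple

Position = Tuple[float, float]


@dataclass
class InteractiveSystem:
    sense: Callable[[], Position]                      # sensing module: where is the prey?
    decide: Callable[[Position, Position], Position]   # command-generating module
    actuate: Callable[[Position], Position]            # virtual animal module

    def step(self, predator_pos: Position) -> Position:
        """Run one sense -> decide -> actuate cycle and return the new predator position."""
        prey_pos = self.sense()
        target = self.decide(predator_pos, prey_pos)
        return self.actuate(target)


def pursue(max_step: float) -> Callable[[Position, Position], Position]:
    """Hypothetical command rule: move straight toward the prey, at most max_step per cycle."""
    def command(predator: Position, prey: Position) -> Position:
        dx, dy = prey[0] - predator[0], prey[1] - predator[1]
        dist = math.hypot(dx, dy)
        if dist <= max_step:
            return prey
        scale = max_step / dist
        return (predator[0] + dx * scale, predator[1] + dy * scale)
    return command


# Stand-in modules: a fixed prey position and an actuator that simply
# accepts the commanded position.
system = InteractiveSystem(
    sense=lambda: (3.0, 4.0),
    decide=pursue(max_step=1.0),
    actuate=lambda target: target,
)

position = (0.0, 0.0)
for _ in range(3):
    position = system.step(position)
```

Because the loop only depends on the three callables, the same command rule could in principle be reused at different spatial scales by swapping the sensing and virtual animal modules.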